ONNX Runtime is an open source machine learning inference engine focused on running models in the widely adopted Open Neural Network Exchange (ONNX) format with the best possible performance.

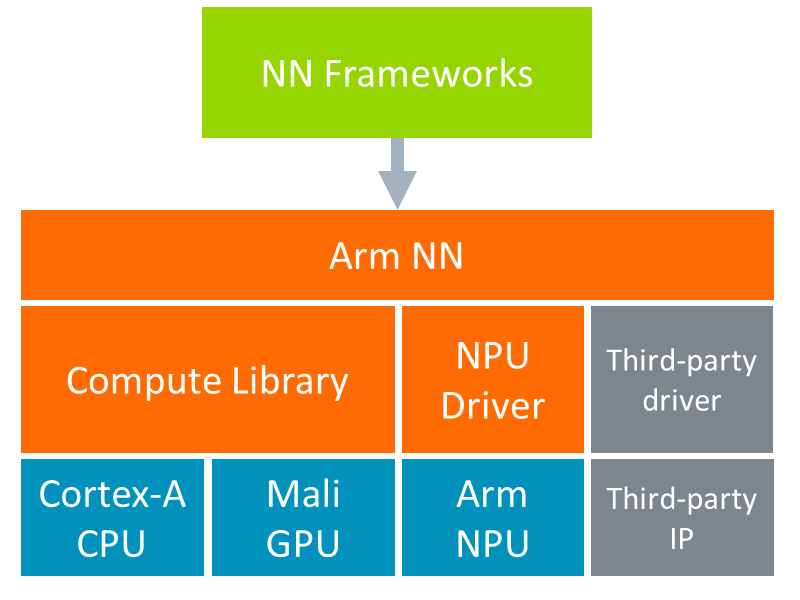

This session will introduce the ONNX format and the ONNX Runtime project, and will provide details about the new Compute Library and ArmNN execution providers for the Arm architecture, which were recently accepted upstream, along with specific optimizations and future work.

Join Mike Caraman from NXP as he takes us through these topics.

Session Speaker

Mike Caraman - Machine Learning Architect, NXP (NXP Semiconductors)

Mihai (Mike) Caraman, PhD, is a machine learning architect for application processors and microcontrollers at NXP Semiconductors. He earned a bachelor’s degree in Mathematics and Informatics in ’97 with a thesis on artificial neural networks. After a 10-year detour in the beautiful world of virtualization, which included contributions to the Linux kernel open source project, the definition of next-gen networking SoCs, and presentations at the KVM Forum and Embedded World conferences, he returned to his first love. He is interested in everything related to machine learning, in particular deep learning: hardware accelerators, neural network architectures, optimizations and security, frameworks, exchange formats, and the blending of edge and cloud services.